I’m interested in designing tools to help people with reasoning and critical thinking, particularly about social issues. This Substack series is about insights from different disciplines that are needed to design such tools. I expect to discuss psychology, cognitive science and AI quite a lot.

This first post is about ethical automation - what I think it needs to be, and what the challenges are. I’m particularly interested in ethical problems involving fairness and equality, as well as “common good” (such as environment). Ethical automation overlaps with ethical AI, but includes “dumb” IT systems, infrastructure design and organisations.

The main points in this post are as follows:

Ethical design includes determining what tasks should be automated and what should not.

Designing an ethically automated system needs a translation of ethical values into exact policies. The system then needs to act according to these policies.

Ethical automated systems also need to help humans to make ethical decisions. This can be directly in the form of interactive decision support, or indirectly through design.

What tasks are suitable for automation?

It seems reasonable to automate work that humans do badly. Examples include data handling and analysis, as well as difficult or dangerous physical work. On the other hand, work requiring human experience should only be partially automated, if at all. The aim is human-machine complementarity. For example, decisions about vulnerable people (such as mental health treatment or social security benefits) need human input. It is also important that these decisions can be challenged, and that the decision-making process is transparent. (Article 22 of the EU GDPR gives people a right not to be subject to decisions based solely on automated processing, where those decisions have legal or similarly significant effects.)

One useful form of partial automation is interactive decision support. This kind of system recommends a decision (and ideally explains why), but does not execute it. Humans can then challenge the recommended decision and if necessary, make their own decision instead. But even in those cases, some automation may be necessary to avoid errors and huge backlogs. Therefore, a decision support system would rely on fully automated processes in the background, particularly involving data handling.
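As a rough illustration of "recommend but do not execute", here is a minimal Python sketch. The names (`Recommendation`, `Decision`, `recommend`, `decide`) and the document-completeness rule are hypothetical and chosen only for this example; a real system would have much richer rules and explanations.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Recommendation:
    option: str        # what the system suggests
    explanation: str   # why it suggests it


@dataclass
class Decision:
    chosen: str
    overridden: bool   # True if the human rejected the recommendation


def recommend(missing_documents: list[str]) -> Recommendation:
    """Hypothetical rule: suggest approval only when the file is complete."""
    if missing_documents:
        return Recommendation(
            option="request more information",
            explanation="Missing: " + ", ".join(missing_documents),
        )
    return Recommendation(option="approve", explanation="All documents present.")


def decide(rec: Recommendation, human_choice: Optional[str] = None) -> Decision:
    """Record the human's decision; the recommendation is only a default."""
    chosen = human_choice if human_choice is not None else rec.option
    return Decision(chosen=chosen, overridden=(chosen != rec.option))


rec = recommend(["proof of address"])
print(rec.option, "-", rec.explanation)

# The case worker knows the document arrived by post and overrides.
final = decide(rec, human_choice="approve")
print(final)
```

The point of the design is that `recommend` never changes anything by itself: only `decide`, which carries the human's choice, produces a decision, and it records whether the recommendation was overridden so that the process stays transparent.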

Full automation means that decisions and actions take place without human intervention, although they may be human-supervised. A fully automated system may be interrupted by a supervisor if things go wrong, or the system itself may stop and flag up some error or exception that it cannot solve. Identifying such exceptional or unusual situations is an important capability where AI techniques can play a role (more on that in future blog posts).
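The "stop and flag" behaviour can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the record format, the `max_amount` limit and the function name `process_payment` are all hypothetical, and plain range checks stand in for the AI techniques mentioned above.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    status: str   # "processed" or "flagged"
    detail: str


def process_payment(record: dict, max_amount: float = 1000.0) -> Outcome:
    """Process a record automatically, or stop and flag it for a human."""
    amount = record.get("amount")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(amount, bool) or not isinstance(amount, (int, float)):
        return Outcome("flagged", "amount missing or not a number")
    if amount < 0 or amount > max_amount:
        return Outcome("flagged", f"amount {amount} outside expected range")
    return Outcome("processed", f"paid {amount}")


records = [{"amount": 120.0}, {"amount": -5}, {"name": "no amount"}]
outcomes = [process_payment(r) for r in records]

# Flagged records go to a human supervisor instead of being guessed at.
review_queue = [r for r, o in zip(records, outcomes) if o.status == "flagged"]
print(len(review_queue), "record(s) need human review")
```

Routine records pass through without intervention, while anything the checks cannot make sense of is handed to a person rather than processed on a best guess.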

What is ethical automation?

It is clear that ethical automated systems should act within ethical requirements. But it is also important that they support humans in making ethical decisions. For example, they should not cause confusion and cognitive overload, but allow clear thinking. For a decision support system, the options that it recommends must be ethical. It can also be required to explain why its recommendations are ethical and what alternative options exist (providing transparency).

Ethical automation also includes design requirements for computational infrastructure. Examples include the following:

automated checks to ensure information is accurate,

implementing fair data reliability: for example, data on the circumstances of vulnerable benefit claimants needs to have the same level of reliable processing and security as business data.

Translating ethical requirements

The automated system has to be aligned with values that matter to the decision-makers. These values can include “fairness”, “equality of opportunities”, “environmental sustainability”, “privacy” and “autonomy” (among others). As an example, an ethical business can set out such values in a mission statement and a code of conduct.

Putting these values into practice requires their translation into automated processes. However, values are often imprecise and have differing interpretations. One approach that addresses these challenges is the “Design for Values” methodology, as explained in the book “Responsible AI” by Virginia Dignum. This uses an intermediate level called “norms”. These are agreed interpretations of values that are sufficiently precise to be applied to technology design. For example, they may be policies or procedures. Of course, they will not include all the meanings and nuances of human values, but that is why full automation is usually not the right approach.

What kind of values can be translated?

Some values are relatively easy to translate. For example, environmental sustainability (an ethical value) can be translated into policies that require business travel to use alternatives to flying for domestic journeys, unless there are exceptional circumstances (such as unavailability of trains). Therefore, a journey planning system would only recommend journeys that satisfy these requirements.
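To make the translation concrete, the travel policy above could be written as a simple filter. This is a sketch under stated assumptions, not a real journey planner: the `Journey` fields and the `recommend` function are hypothetical, and "exceptional circumstances" is reduced to the single case where no train option exists.

```python
from dataclasses import dataclass


@dataclass
class Journey:
    mode: str        # e.g. "train", "flight", "coach"
    domestic: bool
    hours: float


def recommend(journeys: list[Journey]) -> list[Journey]:
    """Norm: no domestic flights unless no train is available."""
    train_available = any(j.mode == "train" for j in journeys)
    compliant = [
        j for j in journeys
        if not (j.mode == "flight" and j.domestic and train_available)
    ]
    # Among the policy-compliant options, show the fastest first.
    return sorted(compliant, key=lambda j: j.hours)


options = [Journey("flight", True, 1.5), Journey("train", True, 4.0)]
print([j.mode for j in recommend(options)])

# Exceptional circumstance: no train exists, so the flight stays in the list.
print([j.mode for j in recommend([Journey("flight", True, 1.5)])])
```

The value ("environmental sustainability") never appears in the code. What appears is the norm, an agreed and precise interpretation of it, which is exactly the intermediate level that makes automation possible.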

A more difficult example is when a company believes in equal opportunities for people who suffered poverty or serious illness in the past, and does not want to exclude people who have a bad credit record as a result. These values could be put into practice by not running credit checks for hiring, or by ensuring that a poor credit rating alone does not influence the hiring decision. Of course, security risks need to be considered, but there may be fairer ways of mitigating them.

Helping humans to make ethical decisions

An ethical automated system should include human input when required, and should help humans to make ethical decisions. Such help can be provided directly in the form of ethical recommendations, along with explanations. Help can also be indirect in the form of an environment that supports clear reasoning and avoids cognitive overload.

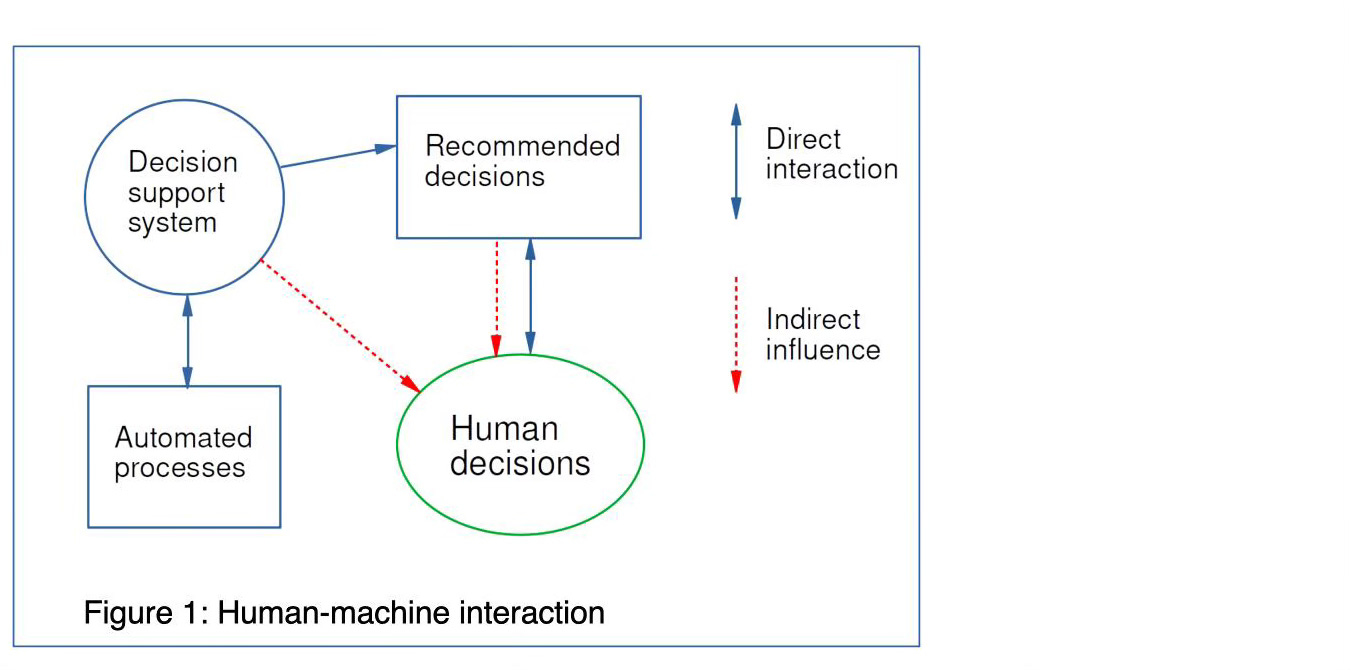

These interactions are shown very roughly in Figure 1. Direct interactions are formally designed as part of the system and are shown in blue. Indirect effects are shown as red dashed arrows. The “automated processes” box includes data infrastructure which must also be ethically aligned. These processes also affect the decision support system, and will therefore also play an indirect role in what the user sees.

A supportive cognitive environment can be implemented using ethical user experience (UX) design. (See for example, The Ethical Design Handbook, by Trine Falbe and others).

But there are still difficult challenges which need an interdisciplinary approach. Even if decision makers agree with ethical principles, they often experience conflicting demands. These can include pressure to cut corners (e.g. paying less than a living wage). Ethical actions might also cause additional work or complexity (such as bureaucracy). Furthermore, the automated system itself may generate additional complexity (and even more work), making it difficult to think clearly about ethical challenges.

The inability to put values into action is called the value-action gap. Ethical technology should help to reduce this gap, not further increase it. Some desirable features include:

suggesting options that the user might not have thought about, which address the conflicting pressures as well as the ethical requirements.

drawing attention to information that tends to be hidden, but is important for ethical decisions (e.g. the lived experience of people in real life).

allowing users the freedom to override recommended decisions (and to go offline), or to create their own options and to ask the system to weigh them up in comparison to a recommended one.

When considering these features, I have been thinking about cognitive biases which are associated with value-action gaps. I will return to these topics in future blog posts.

Key take-aways

Ethical automation requires human-machine complementarity, where tasks involving human lived experience (such as social care) require humans, but tasks that humans do badly should be more fully automated to avoid errors and inefficiencies.

Ethical values can be partly translated into exact policies. These in turn can be applied to the design of automated systems (approximately following the “Design for Values” approach). The resulting technology should then comply with ethical values.

Decision support systems have the potential to help people make ethical decisions and to resist tendencies to choose the easiest but less ethical options (“value-action gap”). This challenge can be addressed by drawing attention to important issues that tend to be forgotten under pressure, and by raising awareness of alternative options that might not have been considered.

Interactive systems rely on infrastructure with fully automated computing and data management processes. Such processes also need to be aligned with ethical requirements, particularly involving security, privacy and reliability.

Later in this blogging series, I will discuss relevant concepts in psychology, cognitive science and AI.

Note: I am not using generative AI for writing. This post is human-produced.