In the last post, I talked about AI agents and how they can have varying levels of complexity. In this post, I will discuss agents with a complex internal structure involving internal representations of the world. This kind of internal structure is called a cognitive architecture and has its origins in cognitive science, which I will discuss first. A key takeaway is that cognitive science is relevant to designing transparent and comprehensible AI systems.

Cognitive science

According to Wikipedia, cognitive science "is the interdisciplinary, scientific study of the mind and its processes". It developed as part of the "cognitive revolution", which emphasised the need to study mental experience and thought. Thinking had previously been neglected by the psychological approach of "behaviourism", which studied only external behaviour.

I find the definition from Johns Hopkins University to be very specific in this regard: "Cognitive science is the study of the human mind and brain, focusing on how the mind represents and manipulates knowledge and how mental representations and processes are realised in the brain".

Cognitive science overlaps with psychology but emphasises processes and representations that can be modelled computationally. For this reason, it is particularly relevant to designing the internal structure of agents. Perception, reasoning, decision-making and planning are all cognitive processes. The term "cognitive agent" can be used to distinguish these more complex agents from simple reflex agents.

Computational models and simulation

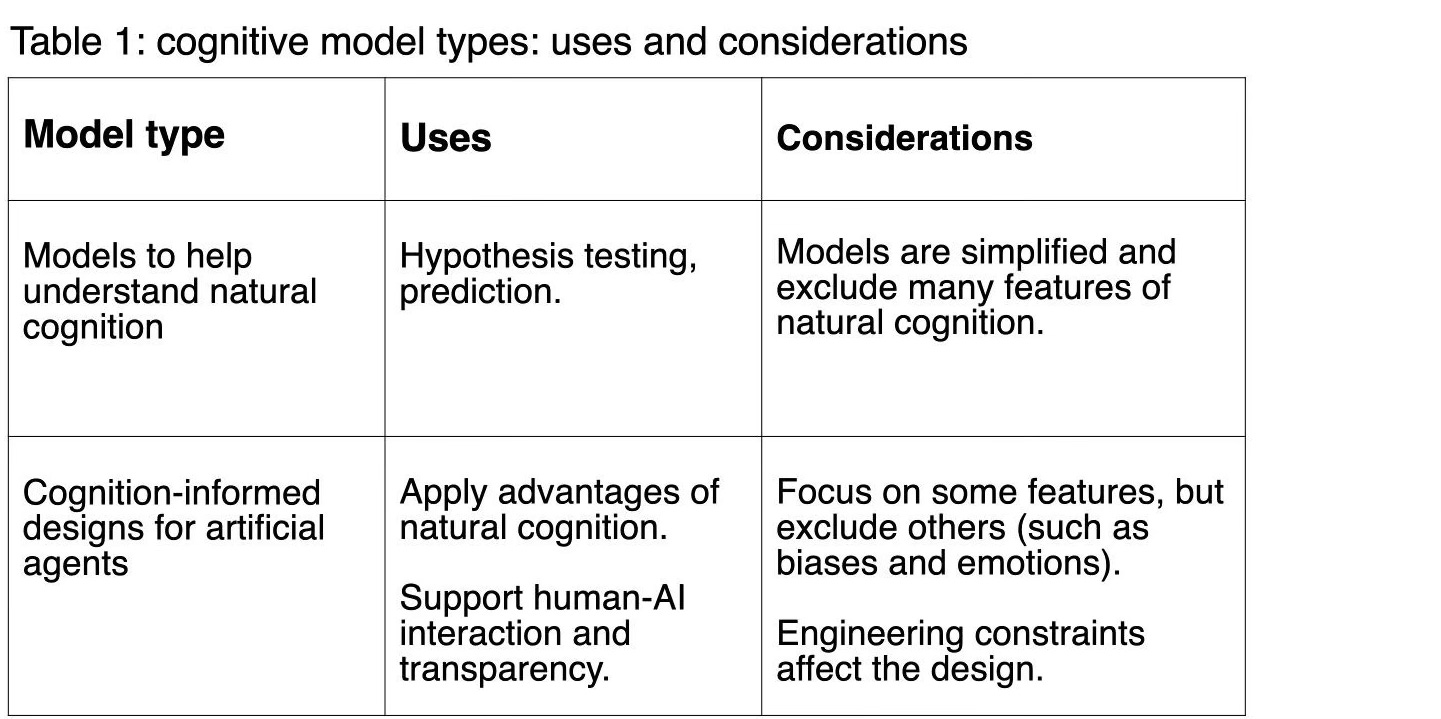

Computational cognitive models can be used for two purposes:

1. Understanding natural cognition: for example, a theory of vision could be translated into a computational process, which could then be tested as part of an agent in a simulation.

2. Designing artificial cognitive systems: theories of cognition could also be applied to robots or agents that are intended for real-world use.

In both cases, agent-based simulation is a useful methodology, in which simulated agents interact with a virtual world. In example (1) above, the theory of vision would be the method by which the agent recognises objects in its synthetic environment. The agent's accuracy and its mistakes could then be checked against real-world data, such as data produced by human subjects recognising the same objects. A simulation can also generate new predictions about natural cognition, which can then be compared with empirical evidence.
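To make example (1) more concrete, here is a minimal Python sketch. It assumes a toy synthetic world in which each object is described by two features, and a hypothetical "theory of vision" that has been reduced to a simple classification rule. The agent's labels are compared with labels that human subjects might have given. All names and data are invented placeholders, not real experimental results.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneObject:
    """An object in the synthetic environment, described by simple features."""
    name: str
    roundness: float  # 0.0 (angular) to 1.0 (round)
    size_cm: float


def toy_vision_theory(obj: SceneObject) -> str:
    """Hypothetical stand-in for a theory of vision: classify by shape and size."""
    if obj.roundness > 0.7:
        return "ball" if obj.size_cm < 30 else "boulder"
    return "box"


def compare_with_humans(objects, human_labels):
    """Return the agent's accuracy and the cases where it disagrees with humans."""
    mistakes = []
    for obj in objects:
        predicted = toy_vision_theory(obj)
        expected = human_labels[obj.name]
        if predicted != expected:
            mistakes.append((obj.name, predicted, expected))
    accuracy = 1 - len(mistakes) / len(objects)
    return accuracy, mistakes


# Illustrative placeholder data only; not from any real experiment.
world = [
    SceneObject("o1", roundness=0.9, size_cm=20),
    SceneObject("o2", roundness=0.2, size_cm=40),
    SceneObject("o3", roundness=0.8, size_cm=50),
    SceneObject("o4", roundness=0.6, size_cm=25),
]
human_labels = {"o1": "ball", "o2": "box", "o3": "boulder", "o4": "ball"}

accuracy, mistakes = compare_with_humans(world, human_labels)
print(f"accuracy={accuracy:.2f}")  # accuracy=0.75
for name, predicted, expected in mistakes:
    print(f"{name}: agent said {predicted!r}, humans said {expected!r}")
```

The pattern of mistakes is as interesting as the accuracy figure. If the model and the human subjects get the same kinds of objects wrong, that lends some support to the theory. Systematic differences point to where the theory may need revising.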

Simulation is not reality. Computational models can provide useful insights, but they are highly simplified, and researchers who use them are not necessarily claiming that cognition is computation. (There is much debate about the relationship between computation and cognition, which goes beyond the current discussion.)

The two uses of cognitive models are summarised in Table 1 below.

Cognition-informed design of AI agents

Computational cognitive models and simulation can also be used for the design of AI agents (point 2 above). See also Table 1. If we use a cognitive model to design an automated decision system, the result would not be a direct copy of human decision-making, because we want to avoid cognitive biases and other errors. However, applying some features of human decision-making can help to make automated decisions transparent and comprehensible to humans. For example, processes such as argumentation and hypothetical reasoning in human decision-making are relevant to AI systems. (This is an important area that needs further discussion, and I plan to return to it in future posts.)

Engineering constraints can also affect design decisions, so the resulting design may diverge significantly from human cognitive models. Integrating cognitive modelling with software and hardware engineering is an ongoing research topic (see, for example, Cognitive Robotics).

Cognitive architecture

A cognitive architecture is a kind of cognitive model that specifies the internal structure of a cognitive agent. The concept of "architecture" is important because it integrates the various functions of cognition into a coherent system. Such integration is necessary for building fully autonomous agents and for studying interactions between different components of natural cognition, such as reasoning and motivation. Two well-known examples are Soar and ACT-R (Adaptive Control of Thought—Rational). Many others exist. See, for example, this survey from 2020.
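The following sketch gives a rough illustration of what "integrating functions into a coherent system" can mean. It wires a few hypothetical components (perception, reasoning and action selection) into a single repeated cycle, and the components share a working memory. The idea of a shared working memory and a repeated decision cycle appears in architectures such as Soar. However, this is not an implementation of Soar, ACT-R or any other published architecture, and every class and method name is invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class WorkingMemory:
    """Shared state that every component reads from and writes to."""
    facts: dict = field(default_factory=dict)


class Perception:
    def update(self, memory: WorkingMemory, observation: dict) -> None:
        # Copy the latest observations into working memory as facts.
        memory.facts.update(observation)


class Reasoning:
    def update(self, memory: WorkingMemory) -> None:
        # Derive a new fact from existing ones (a single hand-written rule).
        memory.facts["must_stop"] = memory.facts.get("light") == "red"


class ActionSelection:
    def choose(self, memory: WorkingMemory) -> str:
        # Pick an action based on what perception and reasoning have produced.
        return "stop" if memory.facts.get("must_stop") else "go"


class CognitiveAgent:
    """Integrates the components into one perceive-reason-act cycle."""

    def __init__(self) -> None:
        self.memory = WorkingMemory()
        self.perception = Perception()
        self.reasoning = Reasoning()
        self.action_selection = ActionSelection()

    def step(self, observation: dict) -> str:
        self.perception.update(self.memory, observation)
        self.reasoning.update(self.memory)
        return self.action_selection.choose(self.memory)


agent = CognitiveAgent()
print(agent.step({"light": "green"}))  # go
print(agent.step({"light": "red"}))    # stop
```

The components communicate only through working memory. This means a richer module, such as a motivation component that also writes to memory, could be added without rewriting the others. In this simplified sense, the architecture is what makes interactions between components something that can be studied.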

Not all cognitive models are called “architectures”. They could, for instance, be focused on a particular cognitive function, such as language or vision, and therefore not suitable for mapping to a complete agent system.

Although architectures are about connecting components, they are often built around a particular theory or system of concepts. For example, CLARION emphasises the difference between explicit and implicit processes: explicit processes are easily explained (such as remembering events), while implicit processes are automatic and unconscious (such as knowing how to do certain tasks). The "Standard Model of the Mind" is a reference framework based on commonly agreed concepts, which cognitive architecture researchers can use as a shared point of comparison.
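Here is a toy Python contrast between the two kinds of process, using a simple yes/no decision. The explicit process is a readable rule that can state why it gave its answer. The implicit process is a set of numeric weights, assumed to have been learned from experience, that produces an answer without a human-readable justification. This only illustrates the distinction as described above. It is not how CLARION itself represents or learns either kind of knowledge, and the function names, thresholds and weights are all made up.

```python
import math


def explicit_decision(temperature_c: float) -> tuple[bool, str]:
    """Explicit process: a symbolic rule that can state the reason for its answer."""
    if temperature_c < 5:
        return True, f"wear a coat because {temperature_c} C is below 5 C"
    return False, f"no coat because {temperature_c} C is not below 5 C"


# Hypothetical weights, as if learned from past experience
# (feature order: temperature in C, wind speed in km/h).
LEARNED_WEIGHTS = (-0.4, 0.3)
LEARNED_BIAS = 2.0


def implicit_decision(temperature_c: float, wind_kmh: float) -> bool:
    """Implicit process: a weighted sum passed through a logistic function.

    It yields an answer, but the numbers do not amount to a stated reason.
    """
    activation = (LEARNED_WEIGHTS[0] * temperature_c
                  + LEARNED_WEIGHTS[1] * wind_kmh
                  + LEARNED_BIAS)
    probability = 1 / (1 + math.exp(-activation))
    return probability > 0.5


print(explicit_decision(3))
print(implicit_decision(3, 10))
```

Both functions can reach the same decision. Only the explicit one can report a reason, which connects this distinction to the transparency theme of this post.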

Levels of abstraction

Cognitive architectures may be purely conceptual and not sufficiently defined for computational implementation. Architectures that are intended as computational models can be defined at different levels of abstraction. For example, in the classic book Vision, David Marr defined three levels that can be applied to a cognitive architecture as follows:

1. Computational theory: this specifies the functions of cognition (what the components are, and what their inputs and outputs are). Most architecture diagrams tend to be at this level.

2. Representation and algorithm: this specifies how each component accepts its input and generates its output. For example, representations may include symbolic logic or neural networks; algorithms may include inference algorithms (for logical deductions) or learning algorithms.

3. Implementation: this specifies the hardware, along with any supporting software and configurations (e.g. simulation software, physical robot or IT infrastructure).

For building AI agents, these levels can help with simulation and rapid-prototyping. For example, level 1 may be defined in detail while the deeper levels are provisionally filled in with simplified processes (not involving complex algorithms or representations). This allows for exploratory simulation at the highest level initially, which can then be gradually refined through experimentation.
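Here is a minimal sketch of that workflow, using a single hypothetical "object recogniser" component. The `Protocol` fixes only what goes in and what comes out, which roughly corresponds to the first level as it is used here. A stub supplies a deliberately simple placeholder process. A refined version can later be swapped in without changing the code that uses the component. The third level is simply whatever machine runs the script. The class and function names are invented for illustration.

```python
from typing import Protocol


class ObjectRecogniser(Protocol):
    """Level 1: only the input (a pixel grid) and the output (a label) are specified."""

    def recognise(self, pixels: list[list[int]]) -> str: ...


class StubRecogniser:
    """Level 2 placeholder: no real representation or learning, just a threshold."""

    def recognise(self, pixels: list[list[int]]) -> str:
        total = sum(sum(row) for row in pixels)
        return "object" if total > 0 else "empty"


class RefinedRecogniser:
    """A later refinement: still a toy, but it compares the shape's height and width."""

    def recognise(self, pixels: list[list[int]]) -> str:
        filled = [(r, c) for r, row in enumerate(pixels)
                  for c, value in enumerate(row) if value]
        if not filled:
            return "empty"
        height = max(r for r, _ in filled) - min(r for r, _ in filled) + 1
        width = max(c for _, c in filled) - min(c for _, c in filled) + 1
        if height > width:
            return "tall object"
        if width > height:
            return "wide object"
        return "square object"


def run_agent(recogniser: ObjectRecogniser, scene: list[list[int]]) -> str:
    # The agent depends only on the level-1 interface, not on a specific algorithm.
    return f"agent sees: {recogniser.recognise(scene)}"


scene = [
    [0, 1, 0],
    [0, 1, 0],
    [0, 1, 0],
]
print(run_agent(StubRecogniser(), scene))     # agent sees: object
print(run_agent(RefinedRecogniser(), scene))  # agent sees: tall object
```

The agent code is untouched as the recogniser is refined. This is what makes it possible to start simulating at the top level while the deeper levels are still provisional.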

Environment and requirements

When designing an AI agent, we need to consider its environment, which defines the situations and events that the agent encounters. It is also important to define the requirements that the agent must satisfy in that environment. For example, one requirement might be that the agent acts in accordance with human values accurately enough that humans can delegate tasks to it in that environment. Matching agent architectures to environments provides a way of developing specialist AI agents.
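During prototyping, one way to make "environment" and "requirements" concrete is to write the environment as a list of situations the agent may meet, and each requirement as a check on the agent's behaviour in those situations. The sketch below assumes a hypothetical meeting-scheduling agent acting on behalf of a user who accepts meetings only between 9:00 and 17:00. The agent, the situations and the requirement are all invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Situation:
    """One event the agent may encounter in its environment."""
    request: str
    requested_hour: int  # 24-hour clock


# The environment: the situations the agent is expected to handle.
ENVIRONMENT = [
    Situation("team sync", 10),
    Situation("late call", 21),
    Situation("early breakfast meeting", 7),
]


def scheduling_agent(situation: Situation) -> Optional[int]:
    """Hypothetical agent: book the requested hour if it falls within working
    hours, otherwise decline (return None)."""
    if 9 <= situation.requested_hour < 17:
        return situation.requested_hour
    return None


def respects_working_hours(situation: Situation, booked_hour: Optional[int]) -> bool:
    """Requirement: never book a meeting outside 9:00-17:00 on the user's behalf."""
    return booked_hour is None or 9 <= booked_hour < 17


def check_requirements(
    agent: Callable[[Situation], Optional[int]],
    environment: list[Situation],
    requirement: Callable[[Situation, Optional[int]], bool],
) -> list[Situation]:
    """Return the situations in which the agent violates the requirement."""
    return [s for s in environment if not requirement(s, agent(s))]


violations = check_requirements(scheduling_agent, ENVIRONMENT, respects_working_hours)
print("violations:", violations)  # violations: []
```

In this example, the agent passes trivially because its rule mirrors the requirement. Keeping the two separate pays off when the agent's internals become more complex (for example, a learned policy), because the same check can still be run unchanged. Requirements about human values are far harder to express as simple predicates, so this only shows the overall structure.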

Key take-aways

Cognitive science emphasises computational models of mental processes, as well as the importance of mental experience (in contrast to behaviourism).

Computational cognitive models can be used for two purposes: study of natural cognition, and design of AI agents that are informed by cognitive models.

Cognition-informed design is not about building copies of human cognition, but about applying features that support human-AI interaction and transparency.

Cognitive architectures are models which specify the interaction between the different components of cognition. Levels of abstraction in cognitive architectures help to manage complexity in the design and prototyping of AI agents.

Note

This blog post is human produced.