In the last blog post I talked about biases that affect ethical decision-making and the two types of thinking called “System 1” and “System 2”, explained by Kahneman in the book “Thinking, Fast and Slow”. This post is about mitigation strategies, and how they might be translated into technology design. I will not prescribe technical solutions, but instead identify strategies and directions for future research.

Key points:

Known mitigations and countermeasures exist for many cognitive biases.

Designs should reduce cognitive effort required to mitigate biases.

Specific tools include checklists, argument mapping, and decision support systems that provide explanations and feedback.

Biases and mitigation strategies

Cognitive bias mitigation is a subject of active research, particularly in medicine and management (see, for example, this BMJ article on cognitive de-biasing). Countermeasures can be understood as a kind of mitigation that involves active efforts to de-bias one’s thinking.

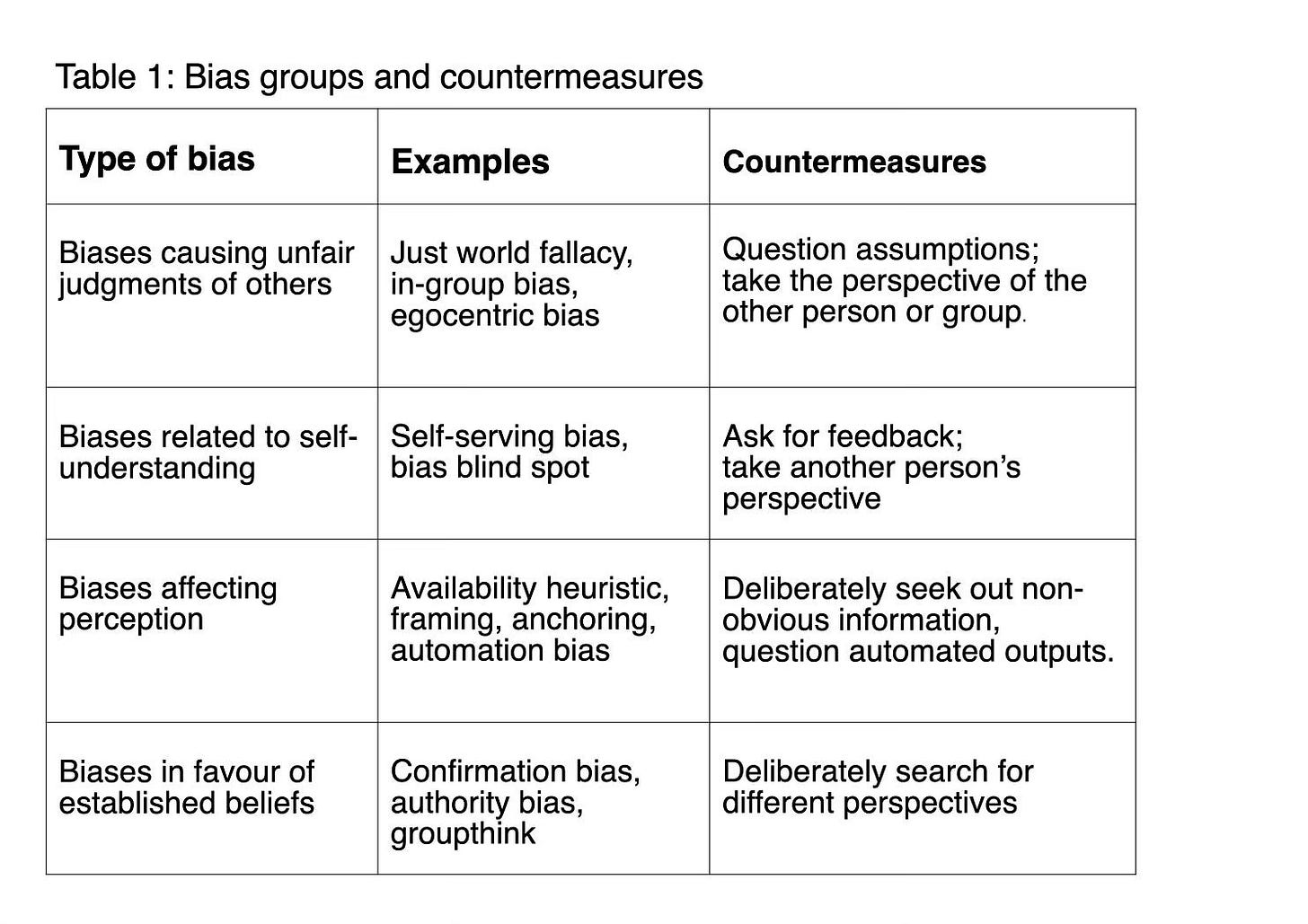

I will focus on the bias categories discussed in the last post. The categories are listed again in the table below, along with recommended countermeasures.

I have used the book “The Biased Brain” by Bo Dennett as a reference, since it includes countermeasures for each bias. The most common ones are: taking a different perspective, searching for non-obvious information, asking for feedback, questioning assumptions, and questioning automated output. In addition to countering specific biases, general strategies for mitigation include metacognition, emotion regulation and critical thinking (which I hope to cover in future posts).

Translating mitigations into technology design

Each of the countermeasures in Table 1 takes mental effort. Technology should reduce the effort required, not increase it. Some design considerations are outlined below.

1. Designing to reduce stress and cognitive overload

The general principle of slowing down and reducing stress is important and is emphasised in bias mitigation research. Biased decisions become more frequent in high-pressure situations because System 2 needs more time and energy than System 1. To promote System 2 thinking, technology needs to reduce cognitive load. This is an active topic in the UX design community (see, for example, this article on tips to reduce cognitive load).

2. Preparing for biases in advance

Many decision-making resources can be prepared in advance to make bias mitigation less effortful. Awareness of other perspectives can be supported by ensuring that the necessary data sources are readily available, so that the user can check them before making a decision. For example, if a decision is being made about social housing needs, a housing application form would be structured according to the perspective of the housing provider. To avoid biases and wrong assumptions about the applicant, the decision-maker could be prompted to explore other factors that the application form does not cover. For example, the applicant may feel unsafe because of high crime in the neighbourhood. If data on crime levels is easily accessible, it is more likely to be taken into account when making the decision.

3. Checklists

For safety-critical decisions such as in medicine, there may be a prescribed workflow where a user is directed through a checklist before making a decision. The checklist may be designed to counteract known biases. This is the approach recommended by Atul Gawande in the book “The Checklist Manifesto”. Checklists can be particularly helpful in situations where important information is hidden and needs to be made visible. The decision-maker will tend to focus on visible data (due to availability bias) and may not have time to deliberately seek out non-obvious details.
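As a rough illustration of how such a workflow might look in software, the Python sketch below blocks a decision until every checklist item has been confirmed. The class names (`ChecklistItem`, `Checklist`) and the example prompts are hypothetical, invented for this post; they are not taken from “The Checklist Manifesto” or from any real clinical system.

```python
from dataclasses import dataclass, field


@dataclass
class ChecklistItem:
    """One prompt the decision-maker must confirm (hypothetical structure)."""
    prompt: str
    confirmed: bool = False


@dataclass
class Checklist:
    """Gates a decision until every item has been confirmed."""
    items: list[ChecklistItem] = field(default_factory=list)

    def confirm(self, index: int) -> None:
        """Mark the item at the given position as confirmed."""
        self.items[index].confirmed = True

    def outstanding(self) -> list[str]:
        """Prompts that have not yet been confirmed."""
        return [item.prompt for item in self.items if not item.confirmed]

    def ready_to_decide(self) -> bool:
        """True only when nothing is outstanding."""
        return not self.outstanding()


# Illustrative items only: each one points at information that is easy to miss.
checklist = Checklist([
    ChecklistItem("Have I looked for data that is not shown on the main screen?"),
    ChecklistItem("Have I considered at least one alternative explanation?"),
])
checklist.confirm(0)
print(checklist.ready_to_decide())  # False
print(checklist.outstanding())
```

The point of the design is that the outstanding items are shown to the user, so that hidden information is made visible without the user having to remember to look for it.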

4. Argument mapping

Making arguments explicit can help to raise awareness of one’s own biases and unquestioned assumptions. Argument mapping provides a visualisation of an argument, showing premises and conclusion as a tree diagram. The technique tends to be used in debates about policy, or in educational settings. Argument maps can support reflective and critical thinking because they help users to visualise their own reasons for their preferred solution and to look at alternative arguments that can lead to different conclusions. See, for example, this recent discussion paper from the Rebooting Democracy group at Southampton University.
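Since an argument map is essentially a tree, it can be represented with a small data structure. The Python sketch below is one possible illustration, not a description of any existing argument-mapping tool. The `Claim` class, its `supports` and `objections` fields, the two helper functions and the housing example are all my own assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Claim:
    """A node in an argument map: a statement with reasons for and against."""
    text: str
    supports: list["Claim"] = field(default_factory=list)
    objections: list["Claim"] = field(default_factory=list)


def render(claim: Claim, indent: int = 0, label: str = "CONCLUSION") -> list[str]:
    """Return the map as indented lines, one per claim."""
    lines = [f"{'  ' * indent}{label}: {claim.text}"]
    for child in claim.supports:
        lines += render(child, indent + 1, "BECAUSE")
    for child in claim.objections:
        lines += render(child, indent + 1, "BUT")
    return lines


def unsupported_premises(claim: Claim) -> list[str]:
    """Leaf claims with no reasons attached: candidates for unquestioned assumptions."""
    children = claim.supports + claim.objections
    if not children:
        return [claim.text]
    found = []
    for child in children:
        found += unsupported_premises(child)
    return found


# Made-up example, loosely based on the social housing scenario.
argument = Claim(
    "The applicant should be given priority for rehousing",
    supports=[
        Claim(
            "The applicant feels unsafe at home",
            supports=[Claim("Crime levels in the neighbourhood are high")],
        ),
    ],
    objections=[Claim("Other applicants have waited longer")],
)
print("\n".join(render(argument)))
print(unsupported_premises(argument))
```

Listing the leaf claims is a simple way to show the user which premises they are currently taking for granted, and therefore which ones might be worth checking against data.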

5. Decision Support Systems

Semi-automated decision support systems were introduced in the first blog post on Ethical Automation. Once a user has stated their goal, such systems determine dynamically what options are possible and which one is best. An example is travel planning. For medical decision support, the goal may be a correct diagnosis or best treatment.

To counteract biases, a decision support workflow can have the following steps:

1. Generate a recommended option and explain why it appears to be best.

2. Generate alternative options and explain why they are not as good.

3. Allow users to generate their own options instead of the ones given. Steps 4 and 5 then apply.

4. Ask the user to give a reason for their generated options (this should be optional).

5. If applicable, provide feedback on user-generated options.

After steps 1 or 2, the user may select one of the given options, but if they disagree with all of them, they may want to suggest their own. They would effectively be asking “why can’t we do X instead?”. There are opportunities here for the system to provide feedback on possible wrong assumptions and to raise awareness of hidden information or alternative perspectives.
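To make the workflow more concrete, here is a minimal Python sketch using the travel planning example. It covers recommending an option with an explanation, listing alternatives, and giving feedback when the user proposes their own option. Everything in it is an assumption made for illustration: the names `Option`, `Recommendation`, `recommend` and `review_user_option`, the single numeric `score`, and the hard-coded `evaluate` function, which stands in for whatever a real system would use to assess a user's suggestion.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Option:
    name: str
    score: float       # higher is better; a single score is a simplifying assumption
    explanation: str   # why the option scores as it does


@dataclass
class Recommendation:
    best: Option
    alternatives: list[Option]


def recommend(options: list[Option]) -> Recommendation:
    """Rank the options and separate the recommended one from the alternatives."""
    if not options:
        raise ValueError("at least one option is required")
    ranked = sorted(options, key=lambda o: o.score, reverse=True)
    return Recommendation(best=ranked[0], alternatives=ranked[1:])


def review_user_option(
    name: str,
    evaluate: Callable[[str], Option],
    recommendation: Recommendation,
    reason: Optional[str] = None,
) -> str:
    """Give feedback on an option proposed by the user; the reason is optional."""
    candidate = evaluate(name)
    lines = []
    if reason:
        lines.append(f"Your reason: {reason}")
    if candidate.score > recommendation.best.score:
        lines.append(f"'{candidate.name}' scores higher than the recommended option.")
    else:
        lines.append(
            f"'{candidate.name}' does not score higher than "
            f"'{recommendation.best.name}': {candidate.explanation}"
        )
    return "\n".join(lines)


def evaluate(name: str) -> Option:
    # Hypothetical evaluator; a real system would consult live data here.
    return Option(name, 0.4, "the road is closed on the planned travel date")


rec = recommend([
    Option("direct train", 0.9, "fastest and no changes"),
    Option("coach", 0.6, "cheaper but takes twice as long"),
])
print(rec.best.name, "-", rec.best.explanation)
for alt in rec.alternatives:
    print(alt.name, "-", alt.explanation)
print(review_user_option("drive", evaluate, rec, reason="I prefer to be flexible"))
```

In this sketch the feedback on the user's option (“drive”) surfaces a piece of hidden information, the road closure, which the user may not have known about. The scores and explanations are themselves automated output, so the user should still be able to question them.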

Of course, it is also necessary for the user to question the recommendations of the automated system (to counteract automation bias). This is particularly important if generative AI tools are used to produce the recommendations and explanations, since their output needs to be fact-checked and complete reliance on it can be dangerous for safety-critical decisions. Generative AI may still be useful for other purposes, however. I will return to this in later posts.

Notes

The blog post is human produced. I have not used any generative AI tool.